AI has become the new normal way of working, living and being productive.Unlike simple chatbots, AI agents can autonomously execute tasks, connect to business applications,

and make decisions on our behalf. They are the “co-pilots” that promise to free us from repetitive work

and help us scale operations.But here is the uncomfortable truth: if you mix the magic of AI Agents with fragile security foundations,

you do not get innovation, you get catastrophe.

The ShadowLeak Attack

Researchers recently uncovered a vulnerability called “ShadowLeak” that demonstrates

exactly what happens when AI and weak security collide.

Here is how the attack worked:

- A malicious email is crafted with hidden instructions

(text in white font, tiny characters, or invisible formatting). - The victim does not even need to click anything. If they use an AI Agent configured to

automatically summarize emails, the agent reads the booby-trapped message. - The hidden prompt tricks the AI Agent into exfiltrating sensitive data

such as PII or confidential company information and sending it to an attacker-controlled website. - The user never sees the hidden content, and the attack leaves almost no trace.

The user did not have to open, click, or approve anything.

The simple act of connecting an AI Agent to email gave the attacker a silent doorway into private data,

making it a zero-click attack.

Two Key Lessons

- Do not jump into AI blindly.

Every new technology carries risk. Before deploying AI Agents, businesses must map potential attack vectors,

evaluate their risk appetite, and implement mitigation strategies. Without this, you are effectively handing

the keys to your data to an autonomous system that can be manipulated. - Strong foundations matter more than fancy tools.

This attack exploited a classic malicious email. The real vulnerability was not the AI model itself but weak

cyber hygiene. If your organization struggles with phishing resilience, patch management, or identity controls,

adding AI Agents only amplifies your exposure. You cannot build advanced technology on shaky security.

Solid cybersecurity foundations such as email filtering, monitoring, incident response, and employee awareness

are the enablers of safe innovation.

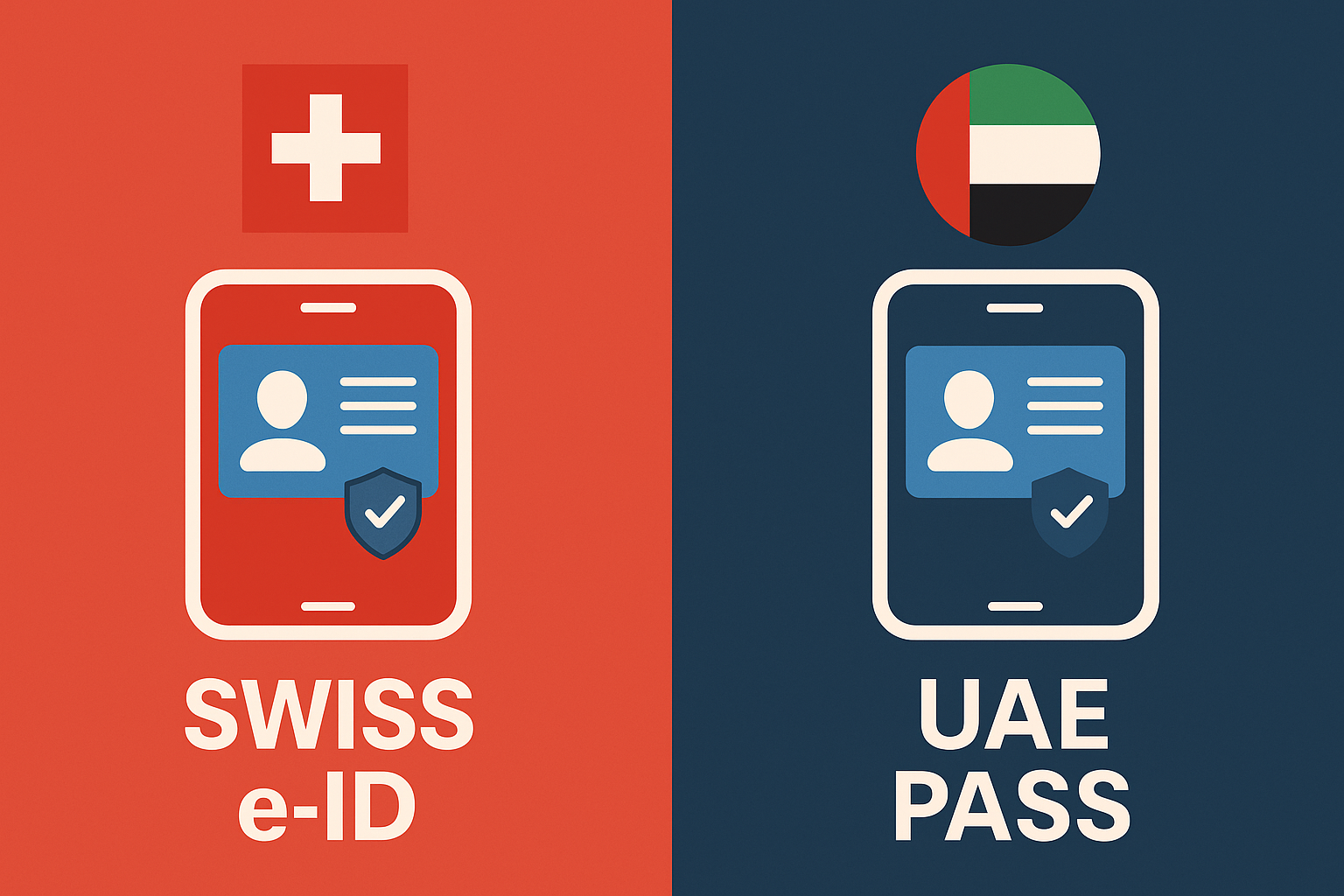

ZENDATA is here to support your organization

Our ZEN360 MSSP SOC service provides the strong cybersecurity foundation you need to safely

adopt and leverage transformative technologies such as AI Agents. Through our consultancy services, we help

you identify the risks associated with new technologies, develop a clear risk matrix, and define practical

mitigation options. This ensures that every innovation you deploy is backed by informed decision-making

and a calculated, controlled risk posture.